The 2nd Key to Unlocking a DevOps Culture: The vRad Development Pipeline

Brian (Bobby) Baker

June 20, 2017

vRad’s philosophy around frequently deploying software updates relies heavily on test automation, which ensures adequate test coverage for each release. Test automation is one of the most challenging aspects of software development at vRad, and we’ve tackled it with a multi-pronged approach that is continually evolving.

Test Automation: Finding ways to test software in an automated fashion to increase breadth and depth of coverage. Basically, writing software to test software.

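We have two basic tenets at vRad for test automation: automated tests do not replace manual tests, and a test does not count as automated unless it runs at least nightly.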

I simply cannot repeat this frequently enough: test automation is not a replacement for manual tests and we place an extremely high value on manual testing at vRad.

Some organizations will adopt automated testing with the goal of eliminating manual tests. Perhaps they are trying to automate all manual tests or their managers use automation as a metric for success. vRad does not do that. We, quite strongly, believe that no matter how much automated testing is in place, having people dedicated to ensuring quality is of the utmost importance. This ranges from ensuring developers follow standards and processes, to spending a lot of time on exploratory testing.

Our test team at vRad includes manual testers. The team executes hundreds of tests for each monthly software release that test automation simply cannot perform, such as:

Basically, we tell our Software Quality Assurance (SQA) to break things!

And on the rare occasions when a bug does get into production, we do not blame SQA. All team members are responsible for quality.

Before we discuss the types of tests we have, and dive into specific patterns, it’s important to spend time discussing metrics. Automation in general has a tendency to appear frightening. I often hear concerns that automation will take jobs away; in my experience a significant amount of automation actually creates opportunities rather than takes them away. Automation isn’t simply a matter of automating manual work – it supplements manual work.

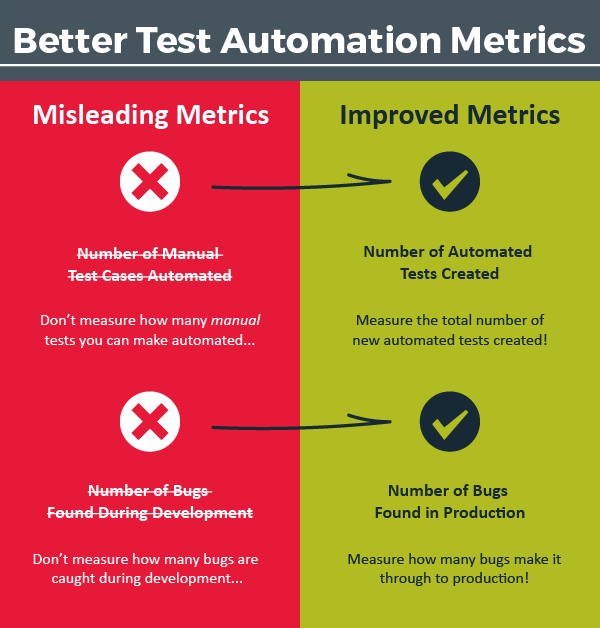

From my perspective, a significant amount of automation anxiety is caused by the use of misleading metrics. Two that I see frequently are the number of manual test cases automated and the number of bugs found during development.

Measuring the number of manual test cases automated is very tempting – so tempting that even though I caution against using it, I capture this metric at vRad! The temptation is pretty simple: the goal of automation is to remove manual labor, so tracking how quickly we automate will show how fast we’re saving money!

I challenge that goal.

Approaching test automation with a goal of simply removing manual labor often backfires for myriad reasons:

At vRad our two primary goals with test automation are to:

While we do automate manual tests, replacing manual tests is not our primary objective – there are many manual tests our team may never automate.

I advise against managing to this metric, even if you choose to track it. At vRad, we do track how many manual test cases are automated – we go back and mark those tests as automated to avoid duplicate work – but we rarely chart or share it. We measure success through other metrics instead.

So, what does vRad measure?

A large number of our automated tests were never manual to begin with – at least not as a defined manual test. Many of our automated tests are focused on specific functions, pages, buttons, and fields; for instance, we might test that a text box for “First Name” accepts various characters, has a certain field length, etc. While manual testers test this, we often don’t get this granular in our written Test Cases. Instead, this typically would occur during exploratory testing – trying to break things!
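As a rough illustration of this kind of granular field check, here is a minimal sketch in Python. The validation rule, the 50-character limit, and the name `is_valid_first_name` are assumptions made up for the example; they are not vRad's actual rules or code.

```python
import unittest

MAX_FIRST_NAME_LENGTH = 50  # assumed limit for illustration, not from the post


def is_valid_first_name(value: str) -> bool:
    """Hypothetical validation rule for a "First Name" text box."""
    stripped = value.strip()
    return 0 < len(stripped) <= MAX_FIRST_NAME_LENGTH


class FirstNameFieldTest(unittest.TestCase):
    def test_accepts_various_characters(self):
        # Hyphens, apostrophes, and accented letters should all be accepted.
        for name in ["Ann", "Jean-Luc", "O'Brien", "José"]:
            with self.subTest(name=name):
                self.assertTrue(is_valid_first_name(name))

    def test_enforces_field_length(self):
        # Exactly at the limit passes; one character over fails.
        self.assertTrue(is_valid_first_name("a" * MAX_FIRST_NAME_LENGTH))
        self.assertFalse(is_valid_first_name("a" * (MAX_FIRST_NAME_LENGTH + 1)))

    def test_rejects_blank_input(self):
        self.assertFalse(is_valid_first_name("   "))
```

Checks like these rarely appear in a written manual test case, yet they are cheap to run on every build once they exist.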

By not managing to the number of manual tests automated, it may seem that we’re not freeing up the SQA team at all. This is partly true – our main focus isn’t reducing the number of manual tests run, primarily because manual testers do a lot more than run defined manual tests. In addition, most of our testers, even long after they have exhausted running all the tests they can think of, still spend a lot of time performing exploratory testing. In a sense, we’re never done testing. By adding automated tests, we reduce manual testing in several core ways:

This metric is common for both manual testing and automated testing. And, again, this metric can be very tempting.

There’s a common piece of wisdom claiming that “the sooner bugs are found, the cheaper they are to fix.” For instance, if you can find a bug during design time, it will be much cheaper to adjust the design before building software than it will be to fix the bug once the software is already built.

This adage is partially correct. It is better to catch the bugs sooner rather than later. The flaw lies in the implication: if you focus on catching bugs early, you don’t have to test as much later.

Imagine that you are building a store application to sell toy cars. The team gets together through the various stages of development – requirements gathering, design, coding, feature testing – and works extra hard to make sure all bugs are worked out of the system as early as possible.

Regression of the application takes two days. There are two potential outcomes:

You see, regardless of finding a few bugs or not, regression needs to occur! The team will not know if there are bugs unless the application is tested. For this reason, it is frequently simpler to look at regression testing as a fixed cost; catching bugs early is a positive thing and does reduce cost, but the majority of the cost is fixed anyway!

At vRad, we choose not to manage around the number of bugs found during the development process. The teams themselves have a good instinct for the quality of the code being produced, and can tell when too many bugs are coming from a certain developer or when a process change would help.

Instead of managing towards bugs found in development, we focus on…

We take the quality of our production environment very seriously. Each bug that is found is tracked, and software engineering leadership reviews each one and discusses what can be done to avoid that bug (or type of bug) in the future. This often results in new test cases, additional test automation in specific areas, or better testing enabled by new processes.

Bugs in production are an inevitability, but many can be avoided. By focusing our testing on the end product, we reward our team for polish instead of punishing them for the diligence of our SQA team finding bugs during development.

Let’s recap our improved metrics:

Now that we’ve established a few pitfalls to avoid, let’s take a deeper look at the actual tests we build at vRad.

Our automated tests are broken into three categories: unit tests, integration tests, and automated UI tests.

If you aren’t familiar with unit tests, the idea is to test a small chunk of code, often just a single function, without interacting with a large part of the platform. You might have a function called “GetText” that returns “Hello World”. In this case, a unit test might validate that the correct string is returned.
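The “GetText” example might look something like the sketch below. It uses Python's built-in `unittest` module purely for illustration (the post links to NUnit, so vRad's real tests are presumably written differently), and `get_text` is a hypothetical stand-in for the function under test.

```python
import unittest


def get_text() -> str:
    """Hypothetical function under test, mirroring the "GetText" example."""
    return "Hello World"


class GetTextTest(unittest.TestCase):
    def test_returns_expected_string(self):
        # A unit test checks one function in isolation: no database, no UI.
        self.assertEqual(get_text(), "Hello World")
```

Saved in a file, a test like this can be run with `python -m unittest`, and it finishes in milliseconds because it touches nothing outside the function.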

Unit tests are designed to be fast. Developers generally run them on their PCs as they develop. Unit tests also run continuously, often with every continuous integration build (see how in vRad Development Pipeline (#2)).

vRad’s unit tests are fairly standard and there are lots of great resources on the internet if you are interested in learning more about unit testing (http://www.nunit.org/index.php?p=quickStart&r=2.6.4 , https://www.youtube.com/watch?v=1TPZetHaZ-A ).

Integration tests, unlike unit tests, test deeper into the platform, but generally do not interact with the UI. For example, these tests might test code that reaches into our databases; they might control a service running on a server to stop it and start it.

Because integration tests actually change data, we’ve developed a few fail safes for handling this mechanic. Some of our tests use a special copy of the databases that is restored for each test case – that way, each test has a known set of data. Many of our integration tests will create or update any necessary data required for the test. We have a rich set of APIs that enable us to control our data, drive our configurations, and use quite a bit of the software that makes up the platform.
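The idea of giving every test a known set of data can be sketched as follows. This is an illustration only: it uses an in-memory SQLite database rebuilt in `setUp` as a stand-in for restoring a database copy, and the `orders` table and `complete_order` function are hypothetical, not part of vRad's platform.

```python
import sqlite3
import unittest

# Hypothetical schema and seed data standing in for the "special copy" of a database.
SEED_SQL = """
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT NOT NULL, status TEXT NOT NULL);
INSERT INTO orders (customer, status) VALUES ('Alice', 'new'), ('Bob', 'new');
"""


def complete_order(conn: sqlite3.Connection, order_id: int) -> None:
    """Hypothetical code under test: it really changes data."""
    conn.execute("UPDATE orders SET status = 'complete' WHERE id = ?", (order_id,))
    conn.commit()


class OrderIntegrationTest(unittest.TestCase):
    def setUp(self):
        # "Restore" the database before every test so each one starts from known data.
        self.conn = sqlite3.connect(":memory:")
        self.conn.executescript(SEED_SQL)

    def tearDown(self):
        self.conn.close()

    def test_complete_order_updates_status(self):
        complete_order(self.conn, 1)
        row = self.conn.execute("SELECT status FROM orders WHERE id = 1").fetchone()
        self.assertEqual(row[0], "complete")

    def test_other_tests_see_fresh_data(self):
        # Unaffected by updates made in other tests, because setUp rebuilt the data.
        rows = self.conn.execute("SELECT status FROM orders ORDER BY id").fetchall()
        self.assertEqual([r[0] for r in rows], ["new", "new"])
```

Restoring per test costs time, which is one reason a suite might instead have tests create or update only the data they need.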

There are many philosophies on the best ways to write automated tests, particularly when it comes to changing data. At vRad, each of our subsystems (Biz, RIS, PACS) has a different purpose and therefore varies in architecture and design. In our decision to write integration tests (in addition to unit tests), we leverage our wealth of APIs with test hooks to test as much of the platform as we can. Often, we find that unit testing alone isn’t sufficient to cover the depth of a software platform, so we use these integration tests to perform deeper, more substantial tests of the platform.

Finally, we have our Automated UI tests. Like many companies, vRad had a couple of false starts prior to success with automated UI tests. We use a product called Ranorex (http://www.ranorex.com/) to drive our tests. Our initial attempts relied quite a bit on “record and play” functionality. This is what it sounds like: a user records actions and that recording becomes the test – to run the test you simply replay the recording.

As might be expected, the record and playback method worked at first, when we had a small number of tests. But soon, the maintenance of those tests became unmanageable – there was too much flux inside of the applications to maintain the tests, which meant re-recording the processes. And the data we used for tests ended up being strewn across hundreds of modules of the recordings.

Our second attempt has proven to be more robust. We decided to use a “data-driven” approach based on a Page-Object design pattern. That’s a lot of buzzwords, so let me explain. First, we don’t do any record-and-play anymore. Instead, we break our applications into pages (or screens). For each page we develop a repository and an “object”. A repository is just the “paths” to each item, such as a button or a text box. An “object” is a chunk of code that describes the page functions: the fields and buttons, what they do, what can be clicked, and so on.
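To make the repository/object split concrete, here is a minimal sketch in Python. It does not use the Ranorex API: `FakeUi` is a made-up stand-in for a UI automation driver, and the element paths and class names are hypothetical.

```python
from dataclasses import dataclass, field


class OrderPageRepository:
    """Repository: only the "paths" to each item on the page (hypothetical paths)."""
    NAME_BOX = "/form[@id='order']/input[@name='name']"
    SUBMIT_BUTTON = "/form[@id='order']/button[@id='submit']"


@dataclass
class FakeUi:
    """Stand-in for a UI automation driver; it just records what was done."""
    values: dict = field(default_factory=dict)
    clicks: list = field(default_factory=list)

    def set_text(self, path: str, text: str) -> None:
        self.values[path] = text

    def click(self, path: str) -> None:
        self.clicks.append(path)


class OrderPage:
    """Page object: describes what a user can do on the page."""

    def __init__(self, ui: FakeUi):
        self.ui = ui

    def fill_name(self, name: str) -> None:
        self.ui.set_text(OrderPageRepository.NAME_BOX, name)

    def submit(self) -> None:
        self.ui.click(OrderPageRepository.SUBMIT_BUTTON)


# Tests talk to the page object, never to raw paths.
ui = FakeUi()
page = OrderPage(ui)
page.fill_name("Ada Lovelace")
page.submit()
```

When a page's layout changes, only the repository paths need updating; tests written against `OrderPage` stay the same, which is the maintainability gain over re-recording.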

We develop test patterns based on the actions that can be taken on each page. For instance, on an order page a user might fill out a name and other information and submit it to the server to create the order. The user might then land on another page that lists the orders she has made. Our test pattern would consist of those actions – filling out the information, submitting the order, and looking for the order on the list.

To test scenarios, we feed these test patterns data. Each data set given to a test pattern is considered a test case. It is easy to quickly have thousands of test cases. We manage this test data in a database with a small custom UI.
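A data-driven test pattern along these lines might be sketched as below. Everything here is hypothetical: `FakeOrderApp` is an in-memory stand-in for the application, the blank-name rule is invented for the example, and the test data lives in a plain list rather than in a database.

```python
class FakeOrderApp:
    """In-memory stand-in for the application under test (hypothetical)."""

    def __init__(self):
        self.orders = []

    def submit_order(self, name: str, item: str) -> bool:
        # Assumed rule for the example: an order needs a non-blank name.
        if not name.strip():
            return False
        self.orders.append((name, item))
        return True


def order_pattern(app: FakeOrderApp, name: str, item: str, expect_listed: bool) -> bool:
    """Test pattern: submit an order, then look for it on the order list."""
    app.submit_order(name, item)
    listed = (name, item) in app.orders
    return listed == expect_listed


# Each row is one test case; adding a case means adding data, not code.
TEST_CASES = [
    {"name": "Ada", "item": "toy car", "expect_listed": True},
    {"name": "Mary O'Neil", "item": "toy truck", "expect_listed": True},
    {"name": "   ", "item": "toy car", "expect_listed": False},
]


def run_pattern(cases):
    """Run the pattern once per data set and return a pass/fail flag per case."""
    return [order_pattern(FakeOrderApp(), **case) for case in cases]


results = run_pattern(TEST_CASES)
```

Because the pattern is written once and the cases are just rows, growing from three cases to thousands is a matter of adding data.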

This approach is much more maintainable than record-and-play. In fact, we spend so little time maintaining the tests that it surprises us quite frequently. They simply work.

And recall that we don’t consider something an automated test unless it runs at least nightly. All of our UI based automated testing runs at night and each morning we triage these tests. Using the Page-Object patterns, we spend very little time actually doing triage and most of the day is spent continuing to build out our test automation for new pages and applications.

At vRad, quality is paramount, so fixing tests is too. We run our tests at least nightly – and often more frequently. Keeping them working can be a challenge at first – tests start out brittle and might break frequently; but as our team monitors the tests and fixes them, they become more resilient with each iteration.

We’ve been pleasantly surprised at vRad with the results of our vigilance. We now have thousands of tests that run at least nightly and simply work. It can be a daunting task to run, triage and fix tests to always pass; but the result of having stable tests is worth the effort!

Our automation efforts focus on measuring towards our values – adding more and more automated tests and reducing the number of bugs found in production. Test Automation at vRad is not about replacing manual tests or manual testers – rather, we deliver higher quality, faster, by increasing our testing depth and breadth through test automation.

Products, systems, environments, and code bases in general tend to gain complexity with time – a customer wants a configuration for this or the Ops team needs to be able to throttle that. Adding robust test automation is a key component of how vRad scales with our customers’ needs and the features of our platform.

Like most organizations, we still do not have enough automated testing. We are continually asking ourselves how we can write more automated tests and how we can run our automated tests faster. But all the progress we’ve made has enabled us to improve our quality and release more frequently. The investment is worth it!

Thanks for joining us on this journey through test automation, and stay tuned for our next post on vRad’s Build Tools (#5). And remember, we’ll be tracking all 7 keys to unlocking a DevOps Culture, as they’re released, in the vRad Technology Quest Log.

Until our next adventure,

Brian (Bobby) Baker
