Critical Relay Times and the Future of Stroke and Trauma Treatment

Today is our third and final post in deference to Stroke and Trauma Awareness Month:

For the latest information on vRad’s Artificial Intelligence program, please visit vrad.com/radiology-services/radiology-ai/

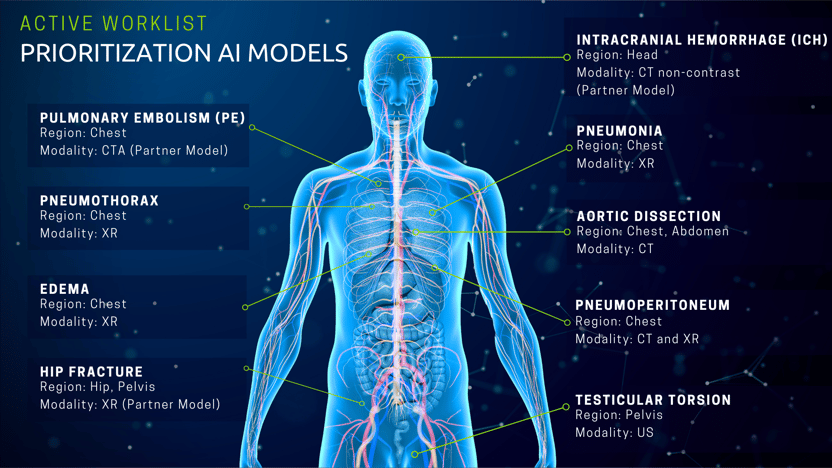

I am pleased to share that vRad has deployed two additional Artificial Intelligence (AI) models to our imaging platform, bringing the total to seven active models helping patients right now.

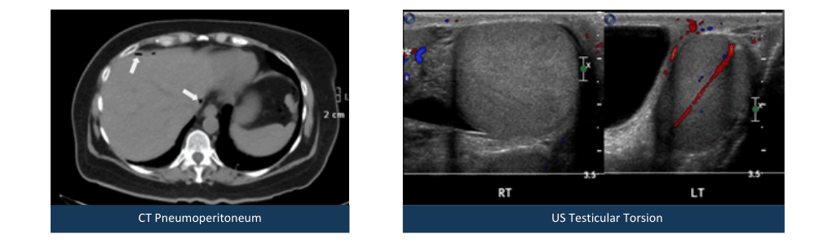

The first new model identifies pneumoperitoneum in chest CTs, and the second model identifies testicular torsion in ultrasound scans. Both conditions are critical, and timely diagnoses will have a positive impact on patient outcomes. As with all our innovation and product development, our models are immediately available to clients as part of our AI-enhanced on-the-ground and in-the-cloud radiology solutions.

The goal of our worklist prioritization AI models is to reduce time to patient care by reducing the overall radiology report turnaround time. There is a positive, sometimes dramatic, patient care impact from the use of these models. In another blog post, neuroradiologist Joshua Morias, MD, relayed how he was able to significantly improve the outcome for a stroke victim by responding to the case request in just 2.9 minutes – only later learning that had AI not prioritized the case to the top of his list, it may have been delayed in his queue for another 10 minutes.

vRad AI models can "look" at images and identify cases that need to be prioritized. When selecting pathologies for modeling, we focus on two criteria:

vRad AI models correctly prioritize about 75 emergent cases per day, reducing time to care by as much as 15 minutes. We also correctly re-prioritize about 10 cases per month that were ordered as non-emergent, reducing the time to care by up to 24 hours for patients with unknown, but potentially critical findings.
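As a rough illustration of worklist prioritization, the sketch below models a worklist as a priority queue in which an AI flag moves a study ahead of studies ordered as emergent or routine. The priority levels, the `Worklist` class, and its method names are hypothetical and chosen only for this example; they are not a description of vRad's platform.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count

# Hypothetical priority levels: a lower number is read sooner.
PRIORITY_AI_FLAGGED = 0
PRIORITY_EMERGENT = 1
PRIORITY_ROUTINE = 2


@dataclass(order=True)
class WorklistEntry:
    priority: int
    arrival: int                      # tie-breaker: earlier arrivals first
    study_id: str = field(compare=False)


class Worklist:
    """Minimal priority-queue worklist (illustrative only)."""

    def __init__(self):
        self._heap = []
        self._arrivals = count()

    def add(self, study_id, ordered_emergent, ai_flagged):
        # An AI flag outranks the priority the study was ordered with,
        # so a flagged "routine" study is re-prioritized to the top.
        if ai_flagged:
            priority = PRIORITY_AI_FLAGGED
        elif ordered_emergent:
            priority = PRIORITY_EMERGENT
        else:
            priority = PRIORITY_ROUTINE
        entry = WorklistEntry(priority, next(self._arrivals), study_id)
        heapq.heappush(self._heap, entry)

    def next_study(self):
        # Pop the study with the lowest priority number and earliest arrival.
        return heapq.heappop(self._heap).study_id


if __name__ == "__main__":
    worklist = Worklist()
    worklist.add("A", ordered_emergent=False, ai_flagged=False)
    worklist.add("B", ordered_emergent=True, ai_flagged=False)
    worklist.add("C", ordered_emergent=False, ai_flagged=True)
    print([worklist.next_study() for _ in range(3)])  # ['C', 'B', 'A']
```

In this toy model, study "C" was ordered as routine but is read first because the model flagged it, which mirrors the re-prioritization of non-emergent orders described above.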

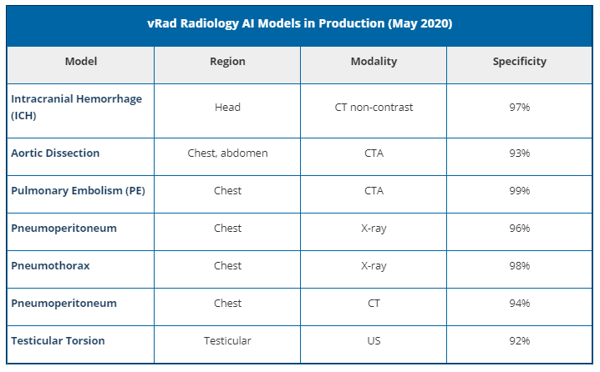

Model performance – how effectively a model can identify the presence (or absence) of a specific pathology – is measured in sensitivity and specificity. Sensitivity is the ability of the model to correctly identify the condition when it is present. Specificity is the ability of the model to correctly rule out the condition when it is absent, so a highly specific model produces few false positives. In other words, a model with low sensitivity and high specificity will not identify all cases, but those it does identify are likely to indicate the particular disease.
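For readers who prefer to see the arithmetic, here is a minimal sketch of the standard definitions in terms of confusion-matrix counts. The counts in the example are invented for illustration and are not vRad data. Positive predictive value is included because it is the measure of how often a flagged case truly has the condition, and it depends on how common the condition is as well as on specificity.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of cases with the condition that the model flags."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of cases without the condition that the model leaves unflagged."""
    return tn / (tn + fp)


def positive_predictive_value(tp: int, fp: int) -> float:
    """Share of flagged cases that truly have the condition."""
    return tp / (tp + fp)


if __name__ == "__main__":
    # Invented counts: 100 studies with the condition, 900 without.
    tp, fn = 60, 40     # the model finds 60 of the 100 positive studies
    tn, fp = 890, 10    # the model wrongly flags 10 of the 900 negatives

    print(f"sensitivity: {sensitivity(tp, fn):.3f}")
    print(f"specificity: {specificity(tn, fp):.3f}")
    print(f"positive predictive value: {positive_predictive_value(tp, fp):.3f}")
```

With these counts the model misses 40 of the 100 positive studies (low sensitivity) but raises only 10 false alarms among 900 negative studies (high specificity), so most of the studies it flags are true positives.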

Once a model is deployed, its performance is monitored on a regular basis. Sensitivity and specificity are not static numbers because radiology image data changes daily. Many factors contribute to these changes: scan protocols change, equipment software is updated, hospitals merge, and patient demographics shift. These dynamics affect a model's performance over time. In the field of machine learning, this degradation in a model's established predictive performance is called drift.
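One simple way to watch for drift is to recompute sensitivity and specificity over a rolling window of recent days and compare them against minimum acceptable values. The sketch below assumes daily confusion-matrix counts are available; the `DailyCounts` type, the window length, and the threshold values are hypothetical choices made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class DailyCounts:
    """Confusion-matrix counts for one model on one day (hypothetical)."""
    tp: int
    fp: int
    tn: int
    fn: int


def rolling_metrics(
    days: List[DailyCounts], window: int
) -> Tuple[Optional[float], Optional[float]]:
    """Sensitivity and specificity pooled over the last `window` days."""
    recent = days[-window:]
    tp = sum(d.tp for d in recent)
    fp = sum(d.fp for d in recent)
    tn = sum(d.tn for d in recent)
    fn = sum(d.fn for d in recent)
    # A metric is None when the window holds no cases it can be computed from.
    sens = tp / (tp + fn) if (tp + fn) else None
    spec = tn / (tn + fp) if (tn + fp) else None
    return sens, spec


def has_drifted(
    days: List[DailyCounts],
    window: int,
    min_sensitivity: float,
    min_specificity: float,
) -> bool:
    """True when either pooled metric falls below its minimum."""
    sens, spec = rolling_metrics(days, window)
    sens_low = sens is not None and sens < min_sensitivity
    spec_low = spec is not None and spec < min_specificity
    return sens_low or spec_low


if __name__ == "__main__":
    # Invented history: a stable week, then a week with more missed cases.
    history = [DailyCounts(tp=9, fp=5, tn=95, fn=1) for _ in range(7)]
    print(has_drifted(history, 7, 0.85, 0.90))
    history += [DailyCounts(tp=7, fp=5, tn=95, fn=3) for _ in range(7)]
    print(has_drifted(history, 7, 0.85, 0.90))
```

Pooling counts over a window rather than judging a single day keeps one unusual day from triggering an alert, at the cost of reacting more slowly to a real change.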

vRad’s successful implementation of AI models into a radiology service depends on monitoring sensitivity and specificity for drift. Each model's performance is reviewed daily. If a model's performance crosses the threshold established by our medical leadership team, we update the model to maintain performance standards. Below are our models’ specificities as of May 11, 2020:

The vRad technology team will continue to build, validate and deploy both natural language processing (NLP) models and image AI models to enhance our prioritization workflows. We are following an AI development path we simply call “the funnel.” The AI development funnel is a multi-year, strategic, phased approach to building and deploying AI models. It is designed to extract value as early as possible, then deploy models into higher value workflows as model performance allows.

As we look ahead to the next wave of innovation and use-cases for image AI in radiology, the next phase will focus on using AI to enhance the quality assurance process, providing actionable data to radiologists and thereby effecting true quality improvement.

The technology team has been working closely with medical leadership to define the “AI-enabled QA workflow” and build prototypes based on daily review of all cases that impact quality levels. Correlating natural language processing (NLP) results with image AI results is giving us a clear picture of how to automate and scale. This is an exciting first step and a precursor to real-time interaction between AI and radiologists.
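To make the idea of correlating NLP results with image AI results concrete, the sketch below compares two yes/no signals per study: whether an image model flagged a pathology, and whether an NLP model found that pathology in the final report. Studies where the two disagree are grouped for review. The `StudyResult` type, the category names, and the grouping logic are assumptions made for this example and do not describe vRad's actual QA workflow.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List


@dataclass(frozen=True)
class StudyResult:
    """Per-study signals (hypothetical structure)."""
    study_id: str
    ai_positive: bool       # image model flagged the pathology
    report_positive: bool   # NLP found the pathology in the final report


def classify(result: StudyResult) -> str:
    """Label a study by whether the two signals agree."""
    if result.ai_positive and result.report_positive:
        return "concordant_positive"
    if not result.ai_positive and not result.report_positive:
        return "concordant_negative"
    if result.ai_positive:
        return "ai_only"        # image model flagged it, report did not
    return "report_only"        # report mentions it, image model did not


def discordant_studies(results: Iterable[StudyResult]) -> Dict[str, List[str]]:
    """Group discordant study IDs by the kind of disagreement."""
    groups: Dict[str, List[str]] = {"ai_only": [], "report_only": []}
    for result in results:
        label = classify(result)
        if label in groups:
            groups[label].append(result.study_id)
    return groups


if __name__ == "__main__":
    sample = [
        StudyResult("S1", ai_positive=True, report_positive=True),
        StudyResult("S2", ai_positive=True, report_positive=False),
        StudyResult("S3", ai_positive=False, report_positive=True),
        StudyResult("S4", ai_positive=False, report_positive=False),
    ]
    print(discordant_studies(sample))
    # {'ai_only': ['S2'], 'report_only': ['S3']}
```

In a sketch like this, the "ai_only" group might point to findings worth a second look, while the "report_only" group might point to cases the image model missed; either way a person would still need to review the study.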

This is only the beginning of the amazing impact of AI on radiology. Our technology teams and clinical teams are on the frontline of technological evolution, pushing the boundaries of clinical innovation to support our clients and to deliver quality patient care quickly.


vRad (Virtual Radiologic) is a national radiology practice combining clinical excellence with cutting-edge technology development. Each year, we bring exceptional radiology care to millions of patients and empower healthcare providers with technology-driven solutions.

Non-Clinical Inquiries (Toll Free):

800.737.0610

Outside U.S.:

011.1.952.595.1111

3600 Minnesota Drive, Suite 800

Edina, MN 55435